Latency refers to the amount of time it takes for data to travel from its source to its destination in a computer network or a communication system. It is often measured in milliseconds (ms) and represents the delay between the initiation of a request and the receipt of a response.

Latency is influenced by several factors, including the distance between the source and destination, the speed of the network connection, the number of network devices the data has to pass through, and the processing time at each step along the way.

In networking, there are different types of latency. Some common types include:

Network Latency: This is the time it takes for data to travel across a network from one point to another. It includes the propagation delay, which is the time it takes for a signal to travel through the physical medium, and the transmission delay, which is the time it takes to transmit the data over the network.

Round-Trip Latency: Also known as RTT, this measures the time it takes for a data packet to travel from the source to the destination and then back to the source. It includes the time for the request to reach the destination and the time for the response to return.

Application Latency: This refers to the delay experienced by an application or software system when processing data. It can include factors such as the time taken for data to be processed, queued, or for a response to be generated.
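As a rough illustration of round-trip latency, the sketch below times how long a TCP connection takes to open. A TCP connect finishes after one SYN / SYN-ACK exchange, so the elapsed time approximates one round trip plus some local overhead. The function names are illustrative, and the demo measures against a throwaway listener on the local machine so that it runs without an external host.

```python
import socket
import time


def tcp_connect_time_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Return how long it takes to open a TCP connection, in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection established; close it straight away
    return (time.perf_counter() - start) * 1000.0


def demo_on_loopback() -> float:
    """Measure against a temporary listener on 127.0.0.1 (no external host needed)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        server.listen(1)
        port = server.getsockname()[1]
        return tcp_connect_time_ms("127.0.0.1", port)


print(f"loopback connect time: {demo_on_loopback():.3f} ms")
```

Pointing `tcp_connect_time_ms` at a remote host and port you are allowed to probe gives a figure closer to real network round-trip latency. Taking several samples is advisable, because individual measurements vary.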

Latency is a critical factor in many real-time applications, such as online gaming, video conferencing, and high-frequency trading, where even slight delays can have a noticeable impact on user experience or system performance. Minimizing latency is often a goal in network design and optimization to ensure efficient and responsive communication.

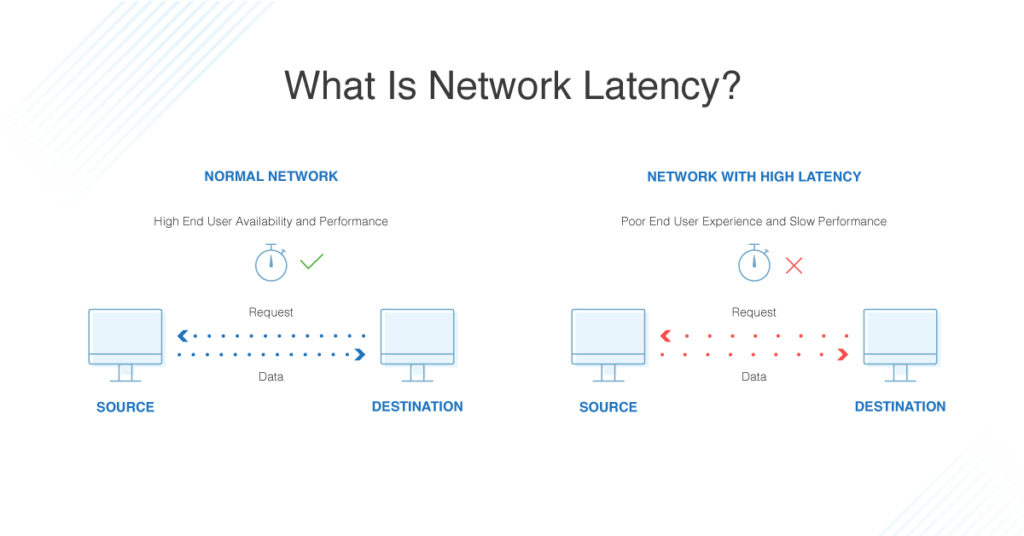

What is latency?

Latency refers to the delay or time lag that occurs between the initiation of a request and the receipt of a response in a system or network. It measures the time it takes for data to travel from its source to its destination.

In computer networks, latency is typically measured in milliseconds (ms) and can be influenced by several factors. These include:

Propagation Delay: This is the time it takes for a signal to travel through the physical medium, such as a cable or fiber optic line. The speed of light sets a lower bound on propagation delay, and the delay increases with the distance between the source and destination.

Transmission Delay: Transmission delay is the time taken to transmit the data over the network. It depends on the bandwidth of the network connection and the size of the data being transmitted.

Processing Delay: Processing delay occurs when the data passes through network devices such as routers, switches, or firewalls. These devices may need to perform various tasks, such as inspecting and modifying the data, which can introduce additional latency.
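The three components above can be added up in a back-of-the-envelope calculation. The sketch below assumes a signal speed of about 200,000 km/s, roughly two thirds of the speed of light and a common approximation for optical fiber. The per-device processing time of 0.05 ms is an invented figure for illustration only.

```python
def propagation_delay_ms(distance_km: float, speed_km_per_s: float = 200_000.0) -> float:
    """Time for the signal to cover the distance (default speed: approximate value for fiber)."""
    return distance_km / speed_km_per_s * 1000.0


def transmission_delay_ms(size_bytes: int, bandwidth_bps: float) -> float:
    """Time to push all bits of the data onto the link."""
    return size_bytes * 8 / bandwidth_bps * 1000.0


prop = propagation_delay_ms(1000)           # 1,000 km of fiber
trans = transmission_delay_ms(1500, 100e6)  # one 1,500-byte packet on a 100 Mbps link
proc = 10 * 0.05                            # assumed: 10 devices at 0.05 ms each

print(f"propagation {prop:.2f} ms, transmission {trans:.2f} ms, processing {proc:.2f} ms")
# prints: propagation 5.00 ms, transmission 0.12 ms, processing 0.50 ms
print(f"one-way total: {prop + trans + proc:.2f} ms")
# prints: one-way total: 5.62 ms
```

In this example the distance dominates: a faster link would shrink only the 0.12 ms transmission share, while the 5 ms propagation share stays the same.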

Latency is a critical factor in various applications and systems, especially those that require real-time communication or quick response times. For example, in online gaming, low latency is crucial to ensure minimal delays between player actions and their impact in the game. In video conferencing or VoIP (Voice over Internet Protocol) calls, low latency helps maintain smooth and natural conversations.

Reducing latency is often a goal in network design and optimization to ensure efficient and responsive communication. This can be achieved through various techniques such as optimizing network infrastructure, minimizing network congestion, using faster network connections, and implementing efficient routing protocols.

What is internet latency?

Latency in the context of the internet refers to the delay or time it takes for data packets to travel from the sender to the receiver and back. It represents the time lag between the initiation of a request (such as clicking a link or sending a data packet) and the receipt of a response.

What causes Internet latency?

Distance: The physical distance between the source and destination of data transmission affects latency. Signals take time to travel, so longer distances generally result in higher latency.

Network Congestion: When the network is congested with high volumes of data traffic, the data packets may experience delays as they compete for limited network resources. Congestion can occur at various points in the network, including routers, switches, and internet service provider (ISP) networks.

Routing Inefficiencies: The routing process involves determining the most efficient path for data to travel between devices on a network. Inefficient routing can introduce additional hops or detours, leading to increased latency.

Network Equipment and Infrastructure: The quality and capacity of the network infrastructure and equipment, such as routers, switches, and cables, can impact latency. Outdated or overloaded equipment may introduce delays.

Bandwidth Limitations: Limited bandwidth can lead to latency, especially when transferring large amounts of data. If the available bandwidth is fully utilized, data packets may need to wait for their turn, resulting in increased latency.

Network Protocol Overhead: Network protocols add headers and other control information to data packets, which increases the packet size. This additional data can contribute to higher latency, especially if the network has limited bandwidth or if there are delays in processing the extra information.

Wireless Networks: Wireless connections introduce additional latency compared to wired connections. Factors such as signal interference, signal strength, and the distance between devices can affect latency in wireless networks.

Server Performance: The performance of the server or destination device that processes the data requests can affect latency. If the server is overloaded or experiencing high processing times, it can introduce delays in responding to requests.

Internet Service Provider (ISP): The quality and reliability of the internet connection provided by your ISP can affect latency. Some ISPs may have congested networks or suboptimal routing, leading to increased latency.

Other Factors: Other factors that can contribute to latency include network security measures (such as firewalls and encryption/decryption processes), the responsiveness of the destination application or server, and the efficiency of the client device’s hardware and software.

It’s important to note that latency can vary depending on the specific network configuration, geographical location, and the specific devices and software involved in the communication.
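To put the protocol overhead item above into numbers, the sketch below computes what share of each frame on the wire is actual payload. It assumes minimum-size IPv4 and TCP headers (20 bytes each, no options) and 18 bytes of Ethernet framing (14-byte header plus 4-byte frame check sequence). Real traffic may carry options, tunnels, or encryption layers that add more.

```python
IPV4_HEADER = 20       # bytes, minimum IPv4 header without options
TCP_HEADER = 20        # bytes, minimum TCP header without options
ETHERNET_FRAMING = 18  # bytes: 14-byte header + 4-byte frame check sequence


def payload_efficiency(payload_bytes: int) -> float:
    """Fraction of the transmitted bytes that is application payload."""
    overhead = IPV4_HEADER + TCP_HEADER + ETHERNET_FRAMING
    return payload_bytes / (payload_bytes + overhead)


for payload in (1460, 100):
    print(f"{payload}-byte payload: {payload_efficiency(payload):.1%} of the frame is data")
# prints: 1460-byte payload: 96.2% of the frame is data
# prints: 100-byte payload: 63.3% of the frame is data
```

Small packets pay proportionally more for their headers, which is why overhead matters most on links with limited bandwidth.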

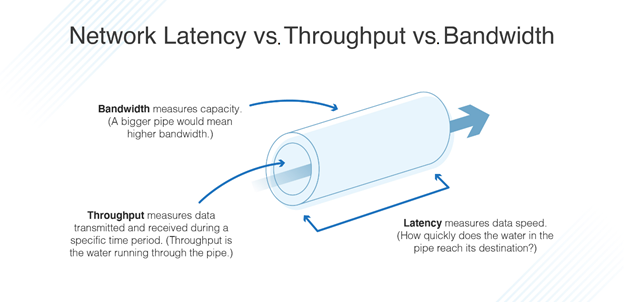

Network latency, throughput, and bandwidth

Network latency, throughput, and bandwidth are interconnected concepts in networking but represent different aspects of network performance. Here’s a brief explanation of each:

Network Latency: Network latency refers to the time delay that occurs when data packets travel from the source to the destination across a network. It is often reported as round-trip time, that is, the time taken for a packet to travel from the sender to the receiver and back. Latency is typically measured in milliseconds (ms). Lower latency indicates faster response times and better real-time performance. Factors contributing to latency include distance, network congestion, routing inefficiencies, and processing delays.

Network Throughput: Throughput represents the amount of data actually transmitted over a network within a given period. It is typically measured in bits per second (bps) or its multiples like kilobits per second (Kbps), megabits per second (Mbps), or gigabits per second (Gbps). Throughput reflects the rate at which the network delivers data in practice. Higher throughput indicates a network’s ability to handle larger amounts of data in a given time frame. Throughput can be affected by factors such as bandwidth limitations, network congestion, and network equipment performance.

Bandwidth: Bandwidth refers to the maximum data transfer rate of a network or a network connection. It represents the capacity or width of the data channel, determining how much data can be transmitted at a given time. Bandwidth is commonly measured in bits per second (bps) and its multiples. It defines the upper limit for network throughput. For example, a network connection with a bandwidth of 100 Mbps can theoretically transmit up to 100 megabits of data per second. Bandwidth is a crucial factor in determining the potential speed of data transmission but does not guarantee actual throughput. Actual throughput can be lower due to factors such as network congestion or limitations at the source or destination devices.

These factors collectively influence the performance and efficiency of network communication.
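A simplified model shows how the three concepts interact. The sketch below assumes a single transfer costs one round trip to request the data plus the time to transmit it at full bandwidth. It ignores congestion, protocol overhead, and TCP ramp-up, so the figures are illustrative rather than predictive.

```python
def transfer_time_s(size_bytes: int, bandwidth_bps: float, rtt_s: float) -> float:
    """One round trip to request the data, then the time to send all its bits."""
    return rtt_s + size_bytes * 8 / bandwidth_bps


def effective_throughput_mbps(size_bytes: int, bandwidth_bps: float, rtt_s: float) -> float:
    """Bits delivered divided by total elapsed time, in megabits per second."""
    return size_bytes * 8 / transfer_time_s(size_bytes, bandwidth_bps, rtt_s) / 1e6


# A 1 MB transfer over a 100 Mbps link at two different round-trip times.
for rtt_ms in (5, 50):
    mbps = effective_throughput_mbps(1_000_000, 100e6, rtt_ms / 1000)
    print(f"RTT {rtt_ms} ms -> {mbps:.1f} Mbps")
# prints: RTT 5 ms -> 94.1 Mbps
# prints: RTT 50 ms -> 61.5 Mbps
```

The bandwidth is 100 Mbps in both cases, yet the throughput actually achieved drops as latency rises, and the effect is stronger for small transfers.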

How can latency be reduced?

Use a Faster Network Connection: Upgrading to a faster internet connection with higher bandwidth can help reduce latency. Consider options such as fiber-optic or high-speed cable connections, which offer lower latency compared to traditional DSL or satellite connections.

Optimize Network Infrastructure: Evaluate and optimize your network infrastructure to minimize latency. Ensure that network devices, such as routers and switches, are up to date and properly configured. Use quality networking equipment that can handle higher traffic volumes efficiently.

Minimize Network Congestion: Reduce network congestion by managing and prioritizing network traffic. Use Quality of Service (QoS) mechanisms to prioritize critical applications and ensure they receive the necessary bandwidth. Implement traffic shaping or bandwidth throttling techniques to prevent any single application from monopolizing network resources.

Optimize Routing: Review and optimize the routing configuration to minimize the number of hops and detours that data packets have to take. Ensure that routing tables are up to date and use efficient routing protocols to enable faster and more direct data transmission.

Content Delivery Networks (CDNs): If you serve content or applications to users across different geographical locations, consider using a CDN. CDNs distribute content across multiple servers in various locations, allowing users to access data from a nearby server, thereby reducing latency.

Caching and Compression: Implement caching mechanisms to store frequently accessed data closer to users, reducing the need for repeated data retrieval from the source. Additionally, compress data where possible to reduce the amount of data transmitted over the network, thus reducing latency.

Optimize Application Performance: Optimize the performance of your applications by minimizing unnecessary data processing and optimizing algorithms. Consider implementing techniques like prefetching, parallel processing, and data compression within your applications.

Reduce Round-Trip Time (RTT): Minimize the number of round trips required between the source and destination. This can be achieved by implementing techniques such as connection pooling, keeping persistent connections open, and using protocols that minimize handshakes, such as HTTP/2.

Proximity: In some cases, physically locating servers closer to end users can significantly reduce latency. This can be achieved by deploying servers in data centers or edge locations that are geographically closer to the target audience.

Monitor and Troubleshoot: Regularly monitor network performance, latency, and potential bottlenecks. Use network monitoring tools to identify latency issues and troubleshoot them promptly. Analyze network traffic patterns to identify any anomalies or areas that need improvement.
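When monitoring latency, a single average can hide occasional spikes. The sketch below summarizes a list of latency samples with the median, the mean, and a nearest-rank 95th percentile. The sample values are invented for illustration, and `summarize_latency` is a hypothetical helper, not part of any monitoring tool.

```python
import math
import statistics


def percentile_nearest_rank(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize_latency(samples_ms: list[float]) -> dict[str, float]:
    return {
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "mean": statistics.mean(samples_ms),
        "p95": percentile_nearest_rank(samples_ms, 95),
        "max": max(samples_ms),
    }


# Invented samples: mostly around 20 ms, with one 95 ms spike.
samples = [20, 21, 19, 22, 20, 95, 21, 20, 23, 20]
s = summarize_latency(samples)
print(f"median {s['median']:.1f} ms, mean {s['mean']:.1f} ms, p95 {s['p95']:.1f} ms")
# prints: median 20.5 ms, mean 28.1 ms, p95 95.0 ms
```

Here the median shows the typical experience, while the 95th percentile exposes the spike that the mean only hints at.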

What is latency in gaming?

In the context of gaming, latency refers to the delay or lag between a player’s input or action and the corresponding response or feedback from the game. It represents the time it takes for data to travel from the player’s device to the game server and back.

Low latency is crucial in gaming because it directly affects the responsiveness and real-time interaction between players and the game world. High latency can result in delayed or sluggish gameplay, negatively impacting the gaming experience. It can cause issues such as delayed character movements, delayed weapon firing, or unresponsive controls.

Latency in gaming is typically measured in milliseconds (ms), and even small differences in latency can be noticeable. For competitive multiplayer games, where split-second reactions are essential, minimizing latency is particularly important.

What is CAS latency?

CAS latency (Column Address Strobe latency) is a timing parameter that measures the delay between the memory controller sending a column address to the memory module and the module returning the corresponding data. It is a specific timing measurement used in dynamic random-access memory (DRAM), which is the type of memory commonly used in computer systems.

This latency is expressed as a number, such as CAS 14 or CAS 16, and represents the number of clock cycles it takes for the memory module to return the requested data after the column address is provided. Lower CAS latency values indicate faster access times and better performance.

The CAS latency value is typically specified alongside other timing parameters, such as RAS (Row Address Strobe) latency, tRCD (Row to Column Delay), tRP (Row Precharge Time), and tRAS (Row Active Time). These timings collectively define the overall performance and efficiency of the memory module.

When choosing RAM modules for a computer system, it is common to consider both the frequency (speed) and CAS latency. Higher frequency RAM modules can provide faster data transfer rates, but they may also have higher CAS latency, which can offset some of the speed advantages. Balancing the frequency and CAS latency according to the specific requirements and capabilities of the system is important for optimizing memory performance.
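Because CAS latency is counted in clock cycles, comparing modules fairly means converting it to time. The sketch below does that conversion, assuming DDR memory, where the clock frequency in MHz is half the advertised data rate in MT/s. The module ratings used are examples only.

```python
def cas_latency_ns(cas_cycles: int, data_rate_mt_s: float) -> float:
    """Convert CAS latency from clock cycles to nanoseconds for DDR memory.

    The clock runs at half the data rate, so one cycle lasts 2000 / data_rate ns.
    """
    return cas_cycles * 2000.0 / data_rate_mt_s


for name, rate, cas in (("DDR4-2400 CL14", 2400, 14),
                        ("DDR4-3200 CL16", 3200, 16),
                        ("DDR4-3600 CL18", 3600, 18)):
    print(f"{name}: {cas_latency_ns(cas, rate):.2f} ns")
# prints: DDR4-2400 CL14: 11.67 ns
# prints: DDR4-3200 CL16: 10.00 ns
# prints: DDR4-3600 CL18: 10.00 ns
```

The CL18 module has the highest cycle count, yet its faster clock gives it the same latency in nanoseconds as the CL16 module and a lower one than the CL14 module.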

How can users fix latency on their end?

Use a Wired Connection: If you’re experiencing latency issues, switch from a wireless connection to a wired Ethernet connection. Wired connections generally offer lower latency and more stability than wireless connections, which can be affected by signal interference and distance from the router.

Close Background Applications: Close any unnecessary applications and processes running in the background on your device. Some applications might consume network resources, leading to increased latency. Closing them can free up bandwidth and reduce latency.

Check for Malware or Viruses: Malware or viruses on your device can consume network resources and lead to increased latency. Regularly scan your device for malware and keep your antivirus software up to date.

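Disable or Limit Bandwidth-Intensive Activities: Bandwidth-intensive activities such as large file downloads, streaming HD videos, or online gaming can consume significant network resources, leading to higher latency. Pause or limit these activities, or schedule them for off-peak times, when you need a responsive connection.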

Clear Browser Cache: Clear the cache and cookies in your web browser. Accumulated cache and cookies can affect browsing speed and introduce latency. Clearing them can help improve the performance of web-based applications.

Update Network Drivers: Ensure that your network drivers are up to date. Outdated drivers can result in performance issues, including increased latency. Visit the manufacturer’s website or use appropriate software to update your network drivers.

Optimize Browser Settings: Adjusting certain browser settings can help improve latency. For example, disabling or adjusting browser extensions, disabling auto-updates, and limiting the number of open tabs can reduce network resource usage.

Use a Different DNS Provider: DNS resolution can impact the time it takes to access websites. Try using a different DNS provider, such as Google DNS or OpenDNS, which may provide faster and more reliable DNS resolution.

Restart Network Devices: Occasionally, network devices like routers or modems can encounter issues that increase latency. Restarting these devices can help resolve temporary glitches and improve performance.

Contact your Internet Service Provider (ISP): If you consistently experience high latency despite taking the above steps, contact your ISP. They can help diagnose and address any issues on their end that may be causing the problem.
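To check whether a change such as switching DNS providers makes a difference, you can time name lookups before and after. The sketch below times a lookup through the operating system's resolver using Python's standard `socket.getaddrinfo`. The name `dns_lookup_ms` is illustrative, and the demo resolves `localhost` so that it runs without internet access.

```python
import socket
import time


def dns_lookup_ms(hostname: str) -> float:
    """Time one name lookup through the system resolver, in milliseconds."""
    start = time.perf_counter()
    socket.getaddrinfo(hostname, None)  # raises socket.gaierror if the name cannot be resolved
    return (time.perf_counter() - start) * 1000.0


print(f"localhost resolved in {dns_lookup_ms('localhost'):.3f} ms")
```

Replace `localhost` with a site you actually visit to get a meaningful figure. Results may be cached by the operating system or router, so a repeated lookup of the same name is often much faster than the first one.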